Web-based AI tools have rapidly become the default way users access advanced capabilities such as content generation, design automation, analytics, and decision support. As these tools move entirely into the browser, consistency across environments becomes a foundational requirement. When features behave differently across Chrome, Safari, Edge, or Firefox, users don’t see a technical limitation; they see unreliability.

This is why cross-browser testing plays a direct role in shaping user trust, even though most users never know it exists.

For AI-driven platforms, browser behaviour influences everything from interface rendering to workflow completion. Without proper cross-browser testing, small inconsistencies surface in front of users at critical moments: during content creation, exports, previews, or saves.

These disruptions undermine confidence and introduce doubt, which is especially damaging for tools that users rely on for accuracy and repeatability.

Why Trust Matters More for AI Tools Than Traditional Web Apps

Trust has always been important in software, but AI tools raise the stakes. Users are often relying on systems they do not fully understand to produce outputs that affect business decisions, creative work, or public-facing content. This makes predictability and stability essential.

When an AI platform behaves inconsistently, users question more than usability. They question:

- Whether the output is correct

- Whether the system is stable

- Whether results can be reproduced

- Whether the tool is safe to depend on

In AI contexts, interface failures quickly become trust failures.


The Browser as the Primary AI Experience Layer

Most modern AI platforms are delivered entirely through the web. Users access powerful models, generation engines, and workflows without installing native software. This convenience, however, places enormous responsibility on the browser layer.

Because capabilities such as media generation, data processing, and real-time interaction now run inside the browser, rendering engines, JavaScript execution, and media handling directly shape the user experience.

Differences in browser engines affect:

- Layout and visual rendering

- Media preview and playback

- Client-side performance

- Memory handling during heavy tasks

When these differences are not accounted for, users experience inconsistency, even when the AI itself is functioning correctly.

How Browser Inconsistencies Erode User Trust

Browser compatibility issues undermine trust in several predictable ways.

- Inconsistent Visual Output

AI tools often produce visual or media-based results. If layouts shift, previews break, or generated assets display incorrectly in certain browsers, users perceive the platform as unreliable or unfinished.

- Feature Availability Gaps

When tools work in one browser but partially fail in another, users lose confidence. Missing buttons, disabled actions, or unsupported features feel arbitrary and confusing.

- Performance Discrepancies

AI workflows can be resource-intensive. Variations in browser performance lead to slower generation times, laggy interactions, or failed exports. Users interpret this as poor platform quality, not browser variance.

- Silent Failures

The most damaging issues are those that fail quietly: actions that appear successful but don’t complete correctly. These moments break trust as soon as users discover them.
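One way to surface silent failures is to stop trusting the success signal and check the result directly. The sketch below assumes hypothetical `save` and `load` callables standing in for a platform's own persistence calls; `save_with_verification` performs the save, reads the value back, and raises if it is missing or wrong, turning a quiet failure into a visible one.

```python
from collections.abc import Callable
from typing import Optional


class SilentFailureError(Exception):
    """Raised when an action reports success but its effect is missing."""


def save_with_verification(
    save: Callable[[str, str], bool],
    load: Callable[[str], Optional[str]],
    key: str,
    value: str,
) -> None:
    """Run a save, then confirm its effect instead of trusting the return value.

    `save` and `load` are hypothetical stand-ins for a platform's persistence
    layer (an API request, browser storage, and so on).
    """
    if not save(key, value):
        raise RuntimeError(f"save of {key!r} reported failure")
    # Read the result back: a save can report success and still not persist.
    if load(key) != value:
        raise SilentFailureError(f"save of {key!r} reported success but did not persist")


# Demo with a hypothetical in-memory store.
storage = {}


def working_save(key, value):
    storage[key] = value
    return True


def broken_save(key, value):
    return True  # claims success but never writes anything


save_with_verification(working_save, storage.get, "draft-1", "hello")
try:
    save_with_verification(broken_save, storage.get, "draft-2", "hello")
except SilentFailureError as err:
    print(f"Caught silent failure: {err}")
```

In browser-based testing, the same idea applies at the UI level: after a "saved" message appears, reload the page and confirm the content is still there.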

The Psychology of Consistency and Confidence

Users associate consistency with competence. When a platform behaves the same way every time, across environments, it feels trustworthy. When behaviour changes based on browser choice, uncertainty creeps in.

Over time, users adapt by:

- Limiting usage to “safe” actions

- Avoiding advanced features

- Reducing reliance on the tool

- Looking for alternatives

This behavioural shift often happens long before churn becomes visible in analytics.


Browser Issues and Perceived AI Quality

An overlooked consequence of browser inconsistency is its effect on how users perceive the AI itself. Users do not separate the interface from the intelligence behind it. If the interface fails, the AI is blamed.

As a result:

- Output accuracy is questioned

- Results feel less dependable

- The platform appears less intelligent

In reality, the AI model may be performing flawlessly. But perception defines trust.

The Business Impact of Trust Erosion

Loss of trust due to browser compatibility issues has direct and indirect costs.

Slower Adoption

New users encountering early friction are less likely to commit to the platform.

Reduced Engagement

Existing users limit their interactions, reducing overall value and stickiness.

Increased Support Load

Browser-specific bugs generate difficult-to-diagnose support tickets.

Reputation Damage

Public reviews citing “bugs” or “glitches” discourage future users, regardless of root cause.

In competitive AI markets, these costs accumulate quickly.

Why AI Platforms Are More Exposed to Browser Risk

AI platforms are especially vulnerable because they often involve:

- Advanced front-end frameworks

- Heavy client-side processing

- Media rendering and previews

- Real-time interactions

- Complex state management

Small browser differences can cascade into major user-visible issues. Traditional QA approaches that focus only on core functionality often miss these edge cases.

Cross-Browser Validation as a Trust Strategy

For AI platforms, ensuring consistent behaviour across browsers is not just about usability; it is about credibility. Trust is reinforced when users know the platform will behave predictably regardless of environment.

This is where cross-browser validation becomes a strategic function. By identifying inconsistencies early, teams prevent trust-damaging experiences from reaching users.

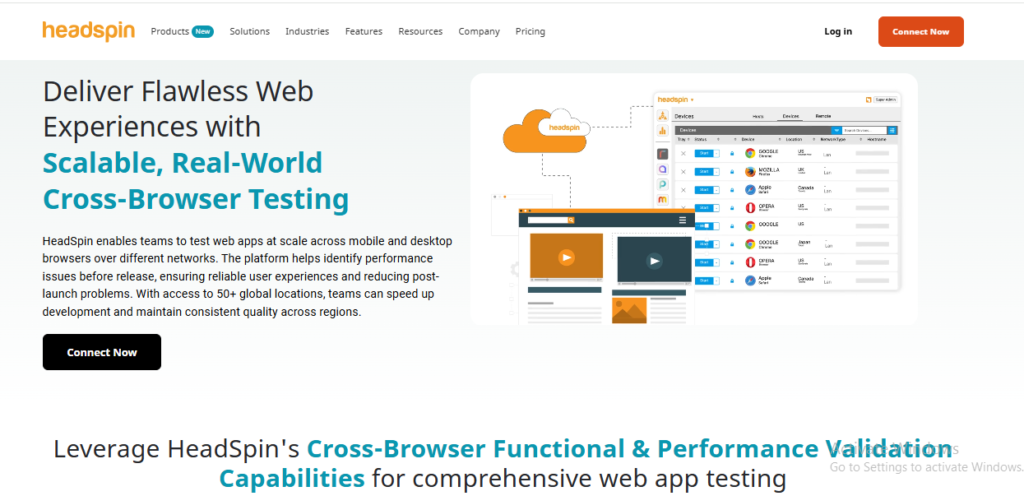

Solutions such as HeadSpin enable teams to evaluate real-world browser behaviour across devices and conditions, helping AI platforms maintain reliability as they scale.
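A simple way to catch export inconsistencies early is to run the same generation-and-export workflow in each browser and compare the resulting files. The sketch below assumes the exported bytes per browser have already been collected by automated runs (the browser labels and byte strings are hypothetical placeholders); it hashes each export and reports browsers whose output differs from a chosen baseline browser.

```python
import hashlib


def find_divergent_browsers(exports: dict[str, bytes], baseline: str) -> list[str]:
    """Return browsers whose exported file differs from the baseline browser's."""
    expected = hashlib.sha256(exports[baseline]).hexdigest()
    return sorted(
        browser
        for browser, data in exports.items()
        if browser != baseline and hashlib.sha256(data).hexdigest() != expected
    )


# Hypothetical export results gathered from automated runs in each browser.
exports = {
    "chrome": b"%PDF-1.7 report v1",
    "edge": b"%PDF-1.7 report v1",
    "firefox": b"%PDF-1.7 report v1",
    "safari": b"%PDF-1.7 report v1 (truncated)",
}
print(find_divergent_browsers(exports, baseline="chrome"))
```

Exact hash comparison only suits exports that are meant to be byte-identical; rendered previews or screenshots usually need a tolerance-based image comparison instead.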

Browser Compatibility as a Competitive Signal

Users rarely praise browser compatibility, but they immediately notice its absence. A web-based AI tool that works seamlessly across browsers feels mature, reliable, and intentionally designed. When users can switch environments without friction, confidence builds naturally and usage feels effortless rather than cautious.

Consistency signals:

- Attention to detail

The platform has been thoroughly validated, not rushed to market.

- Investment in quality

The team prioritises stability and user experience, not just feature velocity.

- Respect for user environments

Users are not forced into specific browsers or devices to access core functionality.

- Operational maturity

The product is capable of supporting real-world usage at scale.

- Long-term reliability

The platform is built to remain dependable as browsers, devices, and user needs evolve.

These signals quietly reinforce trust over time, influencing adoption, retention, and brand perception without requiring explicit messaging.

Measuring the Trust Impact of Browser Issues

Organisations that prioritise user trust actively connect browser behaviour with real user outcomes. Rather than treating compatibility issues as isolated bugs, they analyse how browser-specific behaviour influences engagement, retention, and perception.

Key indicators include:

- Drop-off rates by browser

Higher abandonment in specific browsers often signals hidden compatibility or performance issues.

- Feature usage discrepancies

Inconsistent usage patterns can reveal features that fail or degrade in certain environments.

- Session abandonment patterns

Sudden exits during key workflows often correlate with browser-specific friction.

- Support tickets linked to environment issues

Repeated complaints tied to browsers or operating systems indicate systemic experience gaps.

- Review language mentioning instability

Terms like “buggy,” “glitchy,” or “unreliable” often point to unresolved compatibility problems.

Together, these signals help teams identify where browser behaviour is quietly eroding trust before churn becomes visible.
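The first indicator, drop-off rate by browser, can be computed directly from session data. The sketch below assumes a hypothetical list of session records, each with a `browser` label and a `completed` flag for a key workflow such as generate-then-export. It computes each browser's abandonment rate and flags browsers whose rate exceeds the overall rate by more than `margin`; the 10-point margin and 50-session minimum are illustrative defaults, not recommended thresholds.

```python
from collections import defaultdict


def dropoff_by_browser(sessions, margin=0.10, min_sessions=50):
    """Compute abandonment rate per browser and flag outliers.

    `sessions` is a list of dicts with hypothetical keys "browser" and
    "completed" (True if the user finished the key workflow). A browser is
    flagged when it has at least `min_sessions` sessions and its rate exceeds
    the overall rate by more than `margin`.
    """
    if not sessions:
        return {}, []
    totals = defaultdict(int)
    abandoned = defaultdict(int)
    for session in sessions:
        totals[session["browser"]] += 1
        if not session["completed"]:
            abandoned[session["browser"]] += 1

    overall = sum(abandoned.values()) / sum(totals.values())
    rates = {browser: abandoned[browser] / totals[browser] for browser in totals}
    flagged = sorted(
        browser
        for browser, rate in rates.items()
        if totals[browser] >= min_sessions and rate - overall > margin
    )
    return rates, flagged


# Hypothetical data: 100 sessions each in Chrome and Safari.
sessions = (
    [{"browser": "chrome", "completed": True}] * 90
    + [{"browser": "chrome", "completed": False}] * 10
    + [{"browser": "safari", "completed": True}] * 60
    + [{"browser": "safari", "completed": False}] * 40
)
rates, flagged = dropoff_by_browser(sessions)
print(rates, flagged)
```

Comparing against the overall rate keeps the check simple; with small samples, a statistical significance test would give more reliable signals.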

Best Practices for Protecting User Trust

AI platforms that succeed long term adopt a trust-first mindset.

Treat Browsers as Primary Environments

No major browser should be considered secondary.

Validate Full Workflows

Generation, editing, exporting, and saving must work consistently everywhere.
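Validating full workflows means checking that every step runs, in order, in every supported browser, rather than testing features in isolation. The sketch below is deliberately framework-agnostic: `run_step` is a hypothetical callable standing in for whatever browser automation a team uses, and the step and browser names are illustrative. It records the first failing step per browser, since later steps depend on earlier ones.

```python
from collections.abc import Callable, Sequence
from typing import Optional

# Hypothetical workflow steps and browser labels.
WORKFLOW = ("generate", "edit", "export", "save")
BROWSERS = ("chrome", "edge", "firefox", "safari")


def validate_workflows(
    run_step: Callable[[str, str], bool],
    browsers: Sequence[str] = BROWSERS,
    steps: Sequence[str] = WORKFLOW,
) -> dict[str, Optional[str]]:
    """Map each browser to its first failing step, or None if every step passed."""
    results: dict[str, Optional[str]] = {}
    for browser in browsers:
        results[browser] = None
        for step in steps:
            # Stop at the first failure: later steps depend on earlier ones.
            if not run_step(browser, step):
                results[browser] = step
                break
    return results


def simulated_run_step(browser: str, step: str) -> bool:
    # Stand-in for real browser automation: export fails only in Firefox.
    return not (browser == "firefox" and step == "export")


print(validate_workflows(simulated_run_step))
```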

Monitor Continuously

Browser updates can introduce new issues overnight.
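Because a browser update can change behaviour without any change to the platform's own code, continuous monitoring typically means re-running the same checks on a schedule and comparing against the last known-good run. The sketch below assumes hypothetical run summaries recording the browser, its version, and the fraction of checks that passed; it reports browsers whose pass rate dropped by more than a tolerance. Including both versions in the alert helps separate a browser update from a platform change.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RunSummary:
    browser: str
    version: str      # browser version the checks ran against
    pass_rate: float  # fraction of checks that passed, 0.0 to 1.0


def find_regressions(
    baseline: list[RunSummary], latest: list[RunSummary], tolerance: float = 0.02
) -> list[str]:
    """Return alerts for browsers whose pass rate fell by more than `tolerance`."""
    base_by_browser = {run.browser: run for run in baseline}
    alerts = []
    for run in latest:
        base = base_by_browser.get(run.browser)
        if base is None:
            continue  # no known-good baseline yet for this browser
        if base.pass_rate - run.pass_rate > tolerance:
            alerts.append(
                f"{run.browser} {base.version} -> {run.version}: "
                f"pass rate {base.pass_rate:.0%} -> {run.pass_rate:.0%}"
            )
    return alerts


# Hypothetical results before and after overnight browser updates.
baseline = [RunSummary("chrome", "124", 1.0), RunSummary("safari", "17.4", 0.98)]
latest = [RunSummary("chrome", "125", 1.0), RunSummary("safari", "17.5", 0.90)]
for alert in find_regressions(baseline, latest):
    print(alert)
```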

Prioritise User-Visible Consistency

Even small differences can have outsized trust impact.

FAQs: Browser Compatibility and AI Trust

Why do users blame the platform instead of the browser?

Users experience the product as a whole and assign responsibility to the provider.

Can browser issues affect perceived AI accuracy?

Yes. Interface failures often lead users to doubt the intelligence behind the system.

Is browser compatibility more critical for AI tools?

Often, yes, because AI tools are more complex and ask users to trust processes they cannot see.

Does recommending one browser solve the problem?

No. Users expect flexibility and consistency across environments.

Can proactive testing prevent trust loss?

Yes. Identifying issues before users encounter them is the most effective strategy.


Conclusion

Browser compatibility plays a decisive role in how users evaluate web-based AI tools. Inconsistent behaviour across browsers introduces doubt, disrupts workflows, and quietly erodes trust regardless of how advanced the underlying AI may be. For platforms that depend on user confidence, these signals matter.

AI teams that prioritise consistent browser experiences protect both usability and credibility. By validating real-world behaviour and addressing inconsistencies early, they reduce friction and reinforce reliability. As browser-delivered AI continues to dominate, platforms that invest in experience validation, supported by solutions like HeadSpin, are better positioned to earn and sustain user trust over time.